Summary

Multithreading is a technique that allows for concurrent (simultaneous) execution of two or more parts of a program for maximum utilization of a CPU. So here we’ll understand how multiple tasks are actually handled and executed by a CPU.

In this article we’ll be understanding the basics of

- Covering Basics: Program, Process, Threads

- Single vs Multi thread process

- How Multithread process is managed and executed (Concurrency vs. Parallelism)

- Amdahl’s Law and how you can speed up your applications

Covering Basics: Program, Process, Threads

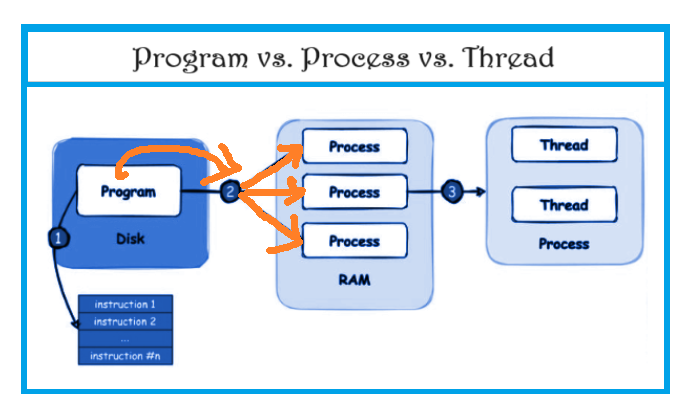

Program

A program is a static set of instructions written in a programming language. It’s stored in a file on disk and includes code, data, and resources that the program uses. They are just files on the disk until they are executed. They don’t consume system resources until they become processes.

Processes

Processes are instances of programs that are currently executing. The OS manages these processes, allocating memory and CPU time to each, and ensuring they don’t interfere with each other. Processes can run concurrently, and the OS uses process scheduling to manage this.

fun fact: when we say “start the server (via npm start or python server.py etc) we actually mean “start the server process”

Threads

Threads are the smallest units of execution within a process. A process can contain multiple threads, each of which can run independently while sharing the process’s resources like memory and file handles. Threads within the same process can communicate with each other more easily and efficiently than separate processes can

Single vs Multi thread process

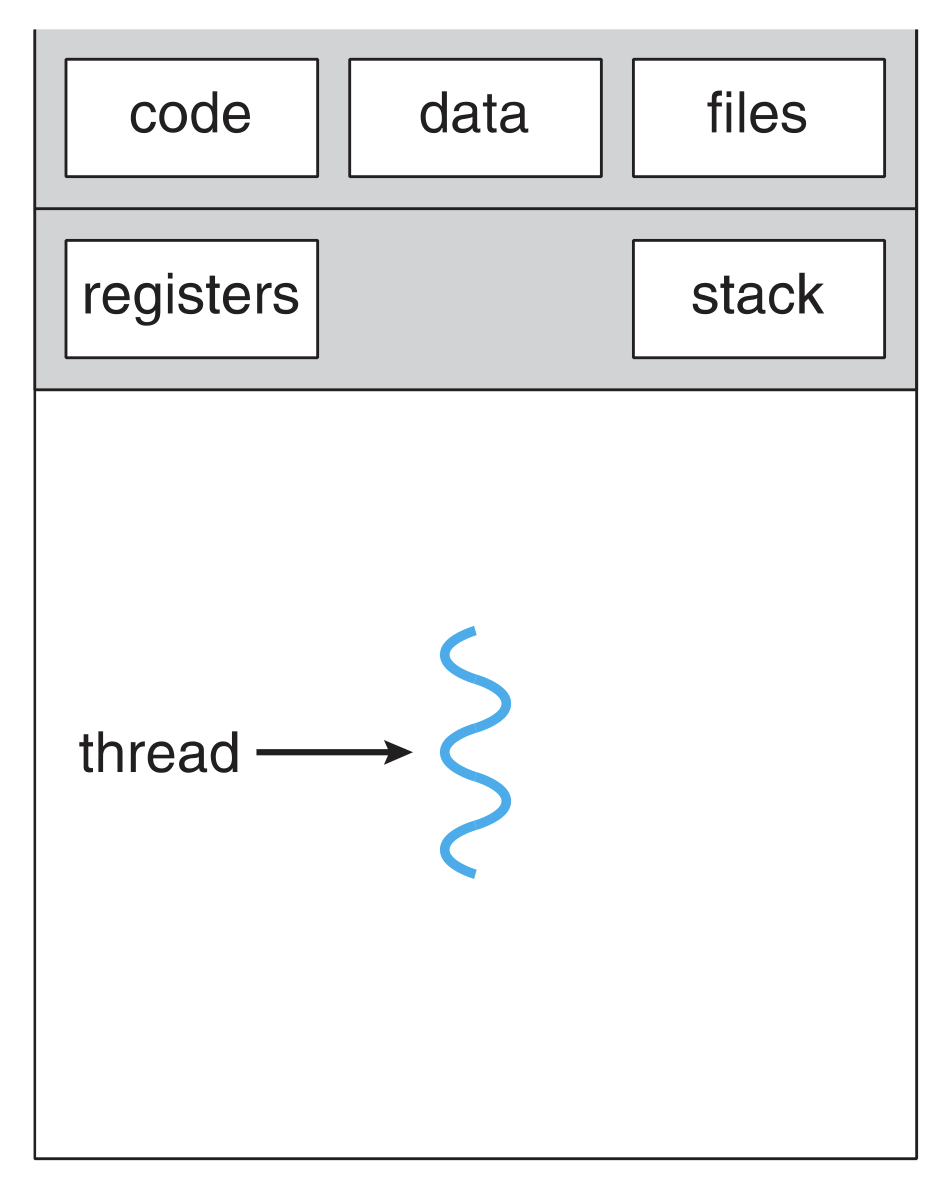

Single-Threaded Process

A single-threaded process is a process that has only one thread of execution. This means it can perform only one task at a time. All instructions are executed sequentially, one after another.

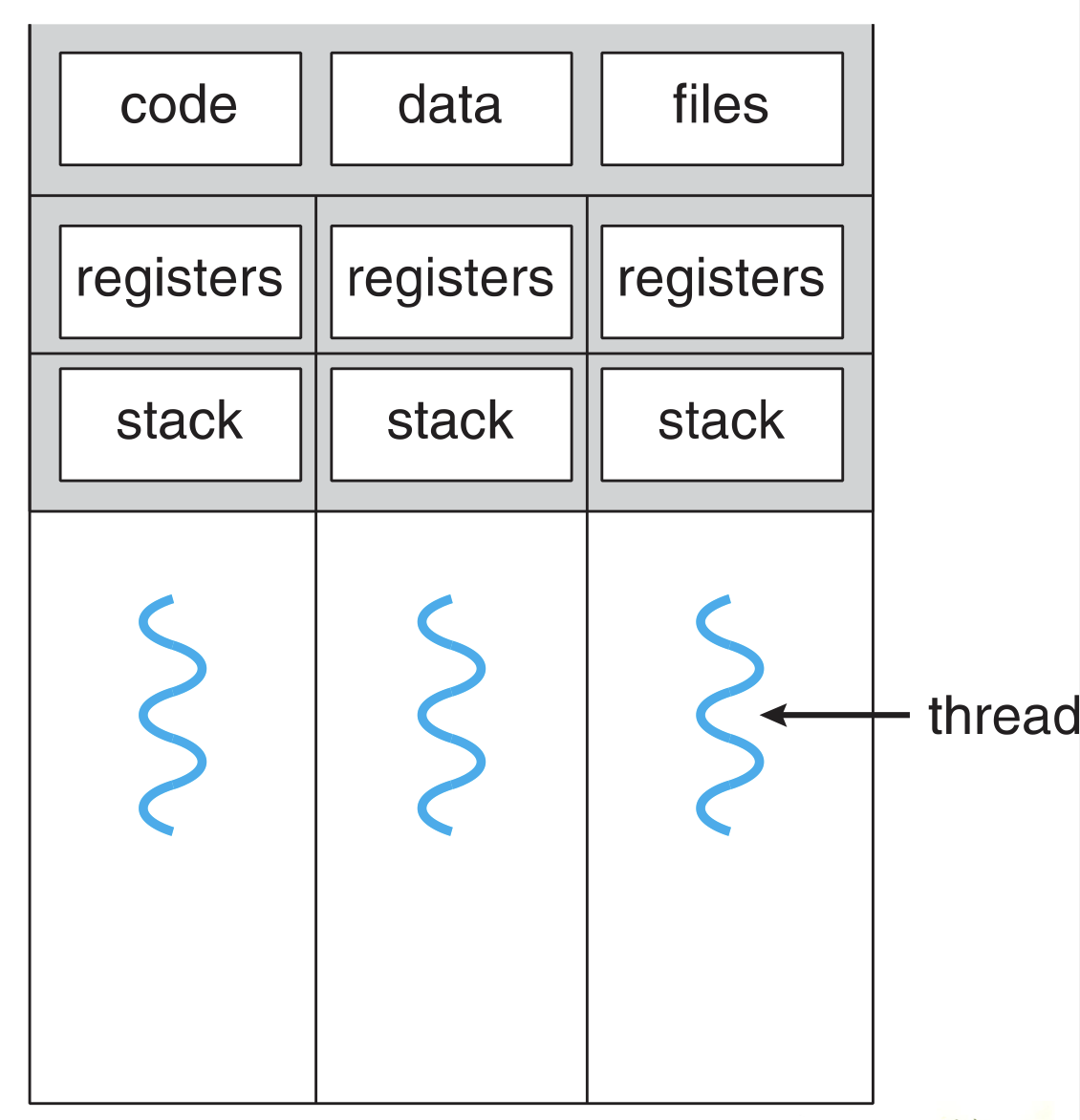

Multi-Threaded Process

A multi-threaded process is a process that contains multiple threads of execution. Each thread can perform different tasks concurrently, sharing the same process resources (like memory and file handles).

Imagine your operating system (OS) as a busy kitchen in a restaurant:

- Program: The recipe book with all the instructions for preparing various dishes (the executable file and instructions).

- Process: When the kitchen starts preparing meals, it’s like starting the backend server process. The kitchen (process) has its own space and resources, and it’s responsible for preparing meals.

- In Single thread: The kitchen has only one chef who handles all tasks sequentially:

- The chef handles a specific order (processing a client request).

- The chef then prepares ingredients in the background (data preprocessing).

- The chef manages the inventory (database interactions).

- The chef records the orders in a log book (logging).

- In Multi threads: The kitchen has multiple chefs, each handling different tasks concurrently:

- One chef handles a specific order (a thread handling a client request).

- Another chef prepares ingredients in the background (a background thread performing tasks like data preprocessing).

- A third chef manages the inventory (a thread handling database interactions).

- A fourth chef records the orders in a log book (a thread for logging).

How Multithread process is managed and executed (Concurrency vs. Parallelism)

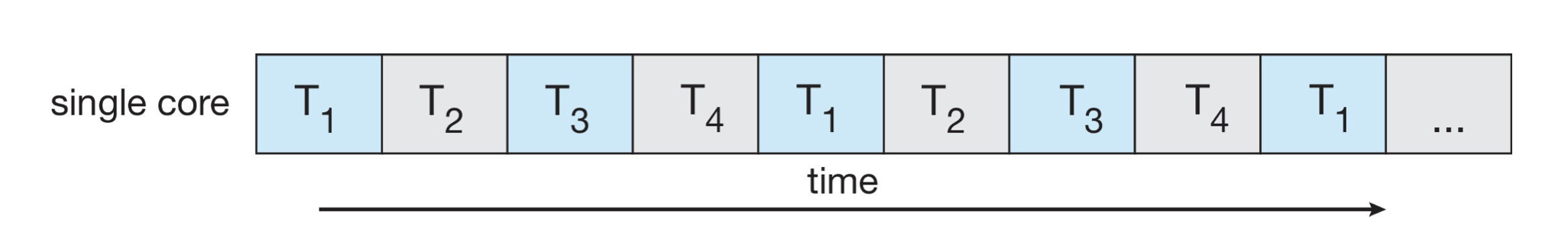

Concurrency

Concurrency is the ability to handle multiple tasks at the same time by switching between them. The tasks might not actually run simultaneously, but the system manages them in such a way that they make progress over the same period. Concurrency is about dealing with lots of things at once and is often managed by time-slicing, where the CPU switches between tasks rapidly.

Example: Imagine a single cashier at a grocery store who is helping multiple customers by quickly switching between them. The cashier starts to scan items for Customer A, then pauses to help Customer B, then goes back to Customer A, and so on. Both customers are being served concurrently, but not at the same time.

Parallelism

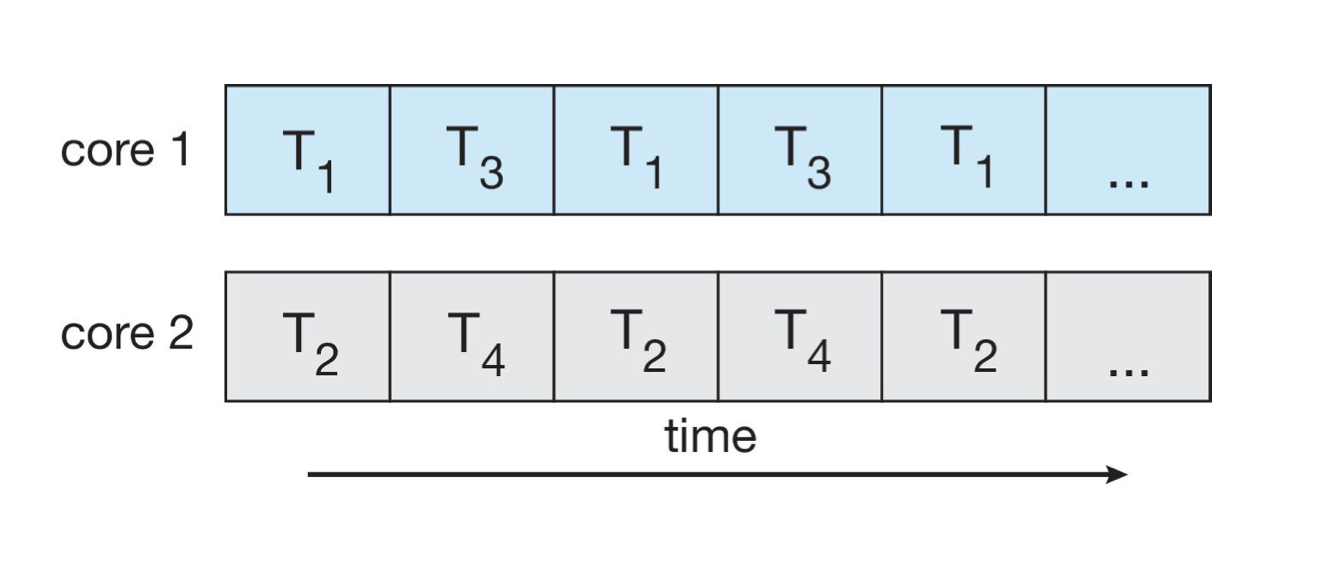

Parallelism is the simultaneous execution of multiple tasks. This is possible when there are multiple processing units (like CPU cores) available. Parallelism is about doing lots of things at once. In a parallel system, tasks actually run at the same time.

Example: Imagine there are two cashiers at the grocery store, each helping a different customer at the same time. Customer A is being helped by Cashier 1, while Customer B is being helped by Cashier 2. Both customers are being served in parallel.

Now handling multiple threads either way comes with their challenges.. you should not do it carelessly

Deadlock

Deadlocks happen when two or more threads aren’t able to make any progress because the resource required by the first thread is held by the second and the resource required by the second thread is held by the first.

Two people, Sakib and Shikhar, are trying to exit a room through opposite doors. Mechanism is such that only one door can be openned at a time. Shikhar is holding the handle of the left door, and Sakib is holding the handle of the right door. Each is blocking the other’s exit because they are both waiting for the other to move. Neither can open their door, resulting in a deadlock where neither can proceed.

Race conditions

Critical section is any piece of code that has the possibility of being executed concurrently by more than one thread of the application and exposes any shared data or resources used by the application for access.

Race conditions happen when threads run through critical sections without thread synchronization. The threads “race” through the critical section to write or read shared resources and depending on the order in which threads finish the “race”, the program output changes.

In a race condition, threads access shared resources or program variables that might be worked on by other threads at the same time causing the application data to be inconsistent.

Starvation

Other than a deadlock, an application thread can also experience starvation, where it never gets CPU time or access to shared resources because other “greedy” threads hog the resources.

Livelock

A livelock happens when two threads keep taking actions in response to the other thread instead of making any progress. The best analogy is to think of two persons trying to cross each other in a hallway. Shikhar moves to the left to let Sakib pass, and Sakib moves to his right to let Shikhar pass.

Both block each other now. Shikhar sees she’s now blocking Sakib and moves to his right and Sakib moves to his left seeing she’s blocking Shikhar. They never cross each other and keep blocking each other. This scenario is an example of a livelock.

Amdahl’s Law and how you can speed up your applications

Amdahl’s Law is used to determine the maximum improvement in performance when only a part of a system is improved. It is especially useful in parallel computing to understand the limits of parallelization.

Formula

Amdahl’s Law is given by the formula:

Where:

- ( S ) is the overall speedup of the system.

- ( P ) is the proportion of the program that can be parallelized.

- ( N ) is the number of parallel processing units.

- ( 1 - P ) is the proportion of the program that remains serial.

Basically tells us how much we can speed up our process by parallelizing the amount

Example

If 80% of a program can be parallelized (P = 0.80) and you use 4 processors (N = 4), the speedup is calculated as follows:

This means if you parallelize almost 80% of your tasks you can speedup your process by 2.5 times. That means if your process initially took 100ms to execute, it will take 40ms post 80% parallelization.

Thats all in this one folks.. let me know where i messed up in writing this :)